Understanding and addressing performance issues for .NET Core Lambda functions

The Problem

One of the biggest barriers to the adoption of serverless is the cold start performance hit. There are many blog posts about AWS Lambda cold start performance and how to improve it. In this blog post I want to raise a related issue, specific to .NET Lambdas, that I have labelled “lukewarm starts”.

The cold start time of any Lambda is made up of a number of factors: downloading the code package, starting the container, bootstrapping the runtime, and then executing your code. Even the execution of your own code may be faster the second time around if parts of it are effectively “cached” on the first run. .NET Core is infamous for having one of the slower cold start times of the languages supported by AWS Lambda. Ironically, performance is one of the reasons you may choose to write your Lambda code in .NET Core in the first place: because .NET Core code is ultimately compiled into platform-specific machine code, it will often outperform dynamic languages like Python or JavaScript once it is warm.

One of the factors that is particularly damaging for .NET Lambda cold start times is that .NET code is initially Just-In-Time (JIT) compiled. A .NET binary contains cross-platform Intermediate Language (IL), which the JIT compiler turns into platform-specific machine code and stores in memory. JIT compilation is done on a method-by-method basis, as and when the application needs it. This takes some time, but on a long-lived server you only pay it once after each deployment, so the handful of affected requests quickly disappear beyond the 99.99th percentile and no one really concerns themselves with it. With Lambda’s ephemeral nature and its concurrency model, where every new execution environment (including each one created to serve additional concurrent requests) pays the cost again, this can easily become a concern for certain workloads.

When execution begins for the first time, a significant number of library methods require JIT compilation. Each method is compiled on an “as needed” basis, the first time it is called, so only the methods in the executed path of your Lambda are actually compiled. I mean, why bother compiling methods you don’t actually want to execute while you are trying to return the requested result to the end user? If a subsequent request causes a significantly different path to be executed, the JIT compiler is invoked again to perform the necessary one-off compilation of the new path. This is what I refer to as a “lukewarm start”. Yes, you have already paid a heavy price for the cold start, and now you have another request that’s slow as well.
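To make the mechanism concrete, here is a toy model in Python (chosen for brevity; it does not touch the real .NET runtime). It assumes every method has a fixed, made-up one-off compile cost that is paid on its first call in an execution environment, and the method names on the two code paths are hypothetical.

```python
# Toy model of lazy, per-method JIT compilation. This is NOT how the real
# .NET JIT is implemented; the method names and costs are hypothetical.

COMPILE_COST_MS = 100  # assumed one-off cost to "compile" a method
EXECUTE_COST_MS = 5    # assumed cost of running an already-compiled method

# Hypothetical methods touched by each of the handler's two code paths.
PATHS = {
    "s3": ["Handler", "SerializeMessage", "S3PutObject"],
    "dynamodb": ["Handler", "SerializeMessage", "DynamoDbSave"],
}


class ToyJit:
    """Tracks which methods have been compiled in one execution environment."""

    def __init__(self):
        self.compiled = set()

    def call(self, method):
        """Return the simulated time (ms) of a single call to `method`."""
        cost = EXECUTE_COST_MS
        if method not in self.compiled:
            # First call in this environment: pay the compile cost once.
            self.compiled.add(method)
            cost += COMPILE_COST_MS
        return cost


def invoke(jit, path):
    """Simulate one invocation that calls every method on the given path."""
    return sum(jit.call(method) for method in PATHS[path])


if __name__ == "__main__":
    jit = ToyJit()  # a single, long-lived execution environment
    timings = [invoke(jit, "s3") for _ in range(3)]
    timings += [invoke(jit, "dynamodb") for _ in range(3)]
    print(timings)  # [315, 15, 15, 115, 15, 15]
```

The first invocation pays for every method on its path (the cold start), later S3 invocations are warm, and the first DynamoDB invocation pays again for the one method it has not seen before (the lukewarm start). In a real function the new path pulls in far more SDK methods, so the lukewarm penalty can be much larger than in this toy.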

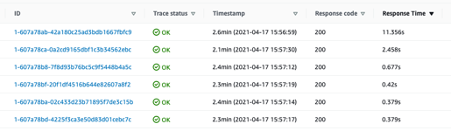

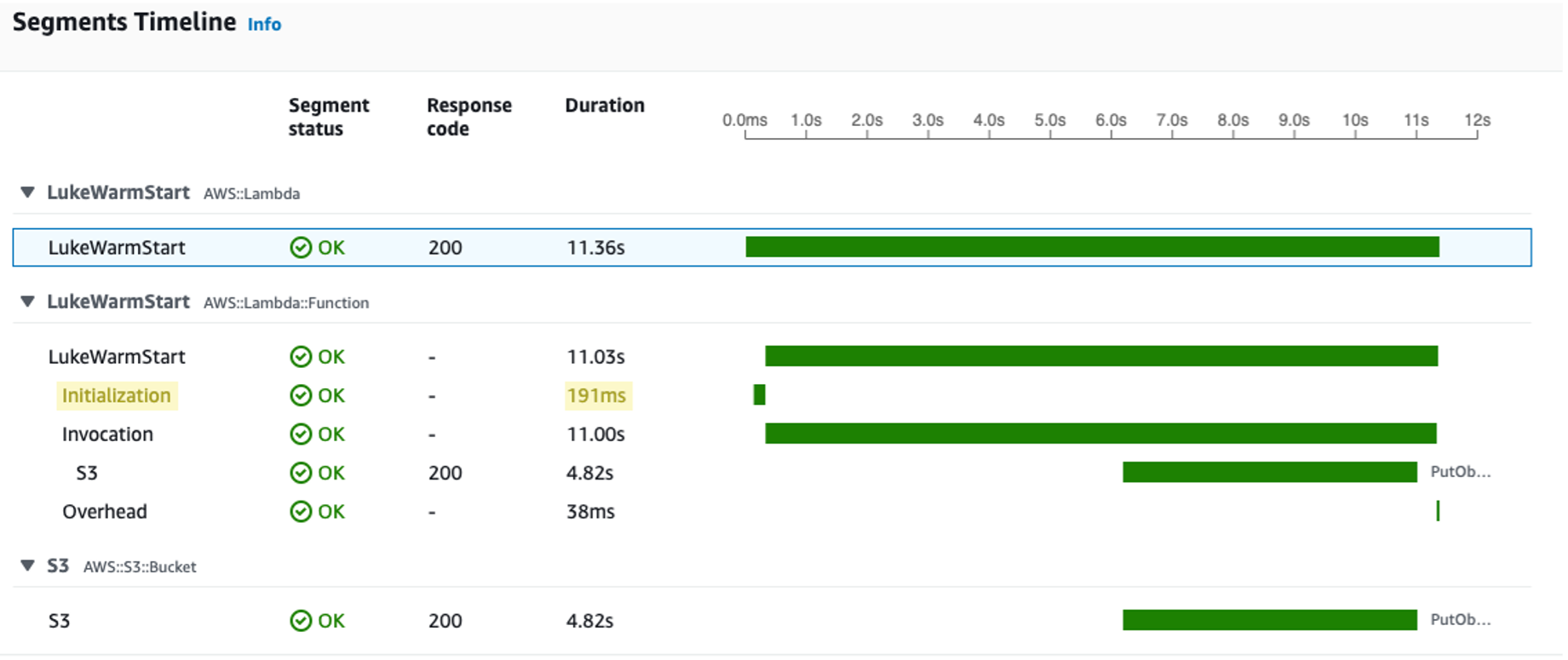

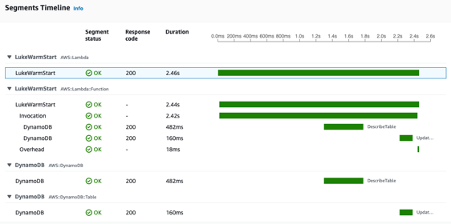

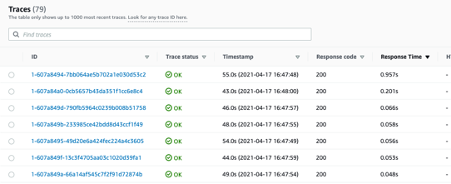

To demonstrate this effect, I set up a simple Lambda that is called 100 times in a loop by a Step Functions state machine. For the first 50 executions it writes a message to an S3 bucket, and then it switches to writing the same message to a DynamoDB table. This switch requires a very different set of AWS SDK libraries to be JIT compiled. The resulting X-Ray traces are shown above.

When ordered by Response Time, you can clearly see an 11.4 second request that looks to be the traditional cold start time. Drilling into the trace validates this, as there is a ~200ms “Initialization” phase. It is obvious even at this point that the time taken for JIT compilation dwarfs the Initialization time. AWS have put in a lot of effort to reduce the initialization phase, and continue to push this time down.

Next there is a ~2.5 second trace, which stands out against the remaining traces of ~0.6 seconds or less recorded for subsequent invocations. In fact, the 95th percentile for this function is 0.23 seconds. Drilling into this trace reveals that there is no “Initialization” phase, just an “Invocation” phase. About 0.5 seconds is spent in a DynamoDB “Describe Table” call, which I’ll get to later in this post, but even after subtracting that and the ~0.23 seconds of a typical invocation, close to 1.8 seconds remain unaccounted for. The bulk of this can again be attributed to JIT compilation.

This is a simple piece of code with only two separate code paths. Any useful Lambda is likely to have significantly more code paths, and will consequently hit these kinds of issues more often. This may sound grim, but all is not lost: many of the same approaches that alleviate cold starts also remediate lukewarm starts.

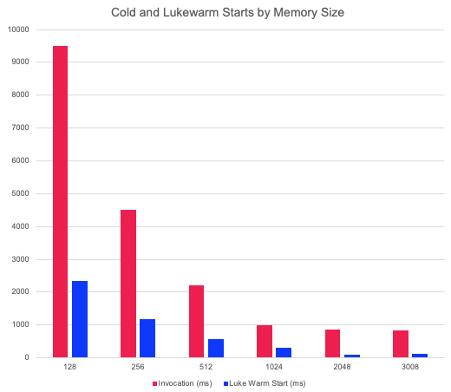

Solutions - Memory

The first point to note here is that JIT compilation is CPU-bound work, and with tiered compilation some of it (re-compiling frequently called methods with more optimizations) runs on background threads. Increasing the memory of a Lambda also scales the amount of allocated CPU (AWS documents that a function has the equivalent of one full vCPU at 1,769MB), which substantially helps CPU-heavy and multi-threaded work like this. The traces above were deliberately run on a Lambda with only 128MB of memory to highlight the problem. Keep in mind that many of the default Lambda templates use a memory size of 256MB, which will still give you poor cold start performance. In my experimentation, I have found there is a sweet spot somewhere between 1024MB and 2048MB; increasing memory beyond this doesn’t give much further gain in cold start or lukewarm start times. Of course, this will depend on the workload, so it’s important to test this for yourself.
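One simple way to locate the sweet spot for your own function is to benchmark it at several memory sizes and stop where the next step up no longer buys a meaningful improvement. The following is a minimal Python sketch, assuming you have already collected one representative duration per memory size; the 10% threshold and the numbers in the example are arbitrary, hypothetical values rather than measurements.

```python
# Pick a memory "sweet spot" from your own measurements. The durations in
# the example are hypothetical; substitute numbers from your own tests.


def find_sweet_spot(durations_ms, min_gain=0.10):
    """Return the smallest tested memory size (MB) where moving to the next
    larger size improves duration by less than `min_gain` (0.10 = 10%).

    durations_ms maps memory size in MB -> measured duration in ms
    (for example the p95 of many invocations at that size).
    """
    if not durations_ms:
        raise ValueError("need at least one measurement")
    sizes = sorted(durations_ms)
    for current, larger in zip(sizes, sizes[1:]):
        gain = (durations_ms[current] - durations_ms[larger]) / durations_ms[current]
        if gain < min_gain:
            return current
    # Every step up still helped noticeably: the largest size tested wins.
    return sizes[-1]


if __name__ == "__main__":
    hypothetical = {128: 8000, 256: 4000, 512: 2000, 1024: 1000, 2048: 950, 3008: 930}
    print(find_sweet_spot(hypothetical))  # 1024
```

Measuring cold and lukewarm invocations separately from warm ones is worthwhile, since they may favour different memory sizes.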

Of course, increasing the memory size may increase the cost of running your Lambdas. I say “may” because, while Lambda compute pricing is proportional to the memory size, it is also proportional to execution time (you are billed in GB-seconds). This matters even more now that Lambda duration is billed in 1ms increments. So you may actually see a reduction in cost by choosing a larger memory size, because of the reduced execution time. As this depends heavily on the workload, it’s something you should investigate for your own functions. Keep in mind that it’s not just JIT compilation that can take advantage of more CPU and memory.
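The trade-off can be checked with a little arithmetic. This Python sketch assumes the on-demand x86 prices for us-east-1 at the time of writing (USD 0.0000166667 per GB-second and USD 0.20 per million requests), ignores the free tier, and uses hypothetical durations; check the AWS Lambda pricing page for your own region and architecture.

```python
import math

# Assumed on-demand x86 prices (us-east-1 at the time of writing, free tier
# ignored). Check the AWS Lambda pricing page for your region/architecture.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_REQUEST = 0.20 / 1_000_000


def billed_gb_seconds(memory_mb, duration_ms):
    """GB-seconds billed for one invocation (duration rounded up to 1 ms)."""
    billed_ms = math.ceil(duration_ms)
    return (memory_mb / 1024) * (billed_ms / 1000)


def invocation_cost(memory_mb, duration_ms):
    """Approximate USD cost of one invocation: compute plus request charge."""
    return billed_gb_seconds(memory_mb, duration_ms) * PRICE_PER_GB_SECOND + PRICE_PER_REQUEST


if __name__ == "__main__":
    # Hypothetical durations: the same work at 128MB vs 1024MB.
    small = billed_gb_seconds(128, 2000)  # 0.125 GB * 2.0 s
    large = billed_gb_seconds(1024, 200)  # 1.0 GB * 0.2 s
    print(small, large, invocation_cost(1024, 200) < invocation_cost(128, 2000))
    # 0.25 0.2 True
```

With these made-up numbers, giving the function eight times the memory costs less per invocation because it finishes ten times faster. More generally, multiplying the memory by k reduces the compute cost only if the duration drops by more than a factor of k.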

Solutions - Other Options

From .NET Core 3.1 onwards, Lambda functions can be published with the MSBuild property “PublishReadyToRun” (usually just called ReadyToRun). This instructs the build to perform some ahead-of-time (AOT) compilation of your assemblies into native code for a specific runtime identifier, such as linux-x64. This can give you serious gains for cold and lukewarm start times. There is (as always) a cost. Not all code can be precompiled, and the precompiled code is generally less optimized than code the JIT compiler produces at runtime, so you may need to weigh the start-up benefit against the warm start cost. It will also make the deployment package larger, which can have a negative impact on the initialization phase of a cold start.

Of course, there is standard advice that still rings true. Keep your Lambdas small and focussed. Don’t introduce too many code paths, and don’t add bulky libraries to do what you can do with much simpler code. Well-structured and re-usable code also becomes important when dealing with these issues.

In extreme cases where you must have the lowest possible cold start and lukewarm start times, and you must use .NET Core, the solution is to use LambdaNative (https://zaccharles.medium.com/making-net-aws-lambda-functions-start-10x-faster-using-lambdanative-8e53d6f12c9c) and build the application as ReadyToRun and SelfContained. Of course, you are then off the supported AWS path, but when the need for speed is all-consuming, one does what one must.

Other First Time Costs

Circling back to the “Describe Table” call, as promised. This is due to the fact that I used a really nice abstraction from the AWS SDK for .NET, called the Object Persistence Model, to write to the DynamoDB table. This makes writing DynamoDB CRUD logic really fast and natural to someone used to .NET Core. The catch is that the very first time your code uses a table, the framework explicitly calls the “Describe Table” API in order to build its internal model. This model is then stored statically so that subsequent invocations in the same execution environment can re-use it. Abstractions like this are great, but you need to be aware of the hidden cost that comes with them. The good news is that this cost also decreases as you increase memory size. For example, at 2048MB the “Describe Table” call takes around 50ms, as opposed to nearly 500ms at 128MB. If, however, this cost is still unacceptable for your use case, it’s important to know that the AWS SDK for .NET also provides less expensive abstractions, as well as the ability to call the APIs directly yourself. In my experience, the abstraction is almost always worth the one-off price you pay.

The idea of storing things statically the first time you need them is a valuable concept for overall application performance. Many objects, such as HttpClient instances and AWS SDK clients, require some form of initialization but can then be re-used. If you dispose of and re-create these objects on every request, you pay that cost every time, whereas if you create them once at start up, only your first call will be significantly longer than subsequent calls. This might feel like a cold start, but it really isn’t, as you would otherwise pay that cost on every request. Ideally, you should create these objects on an as-needed basis, rather than provisioning everything you may need on the first call. That way the cost is spread over multiple calls, and only paid if and when it’s required.
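Here is the same lazy, create-once pattern, sketched in Python for brevity: each expensive object is built the first time a code path needs it and then cached for the lifetime of the execution environment. ExpensiveClient and the event shape are hypothetical stand-ins; in C# the equivalent is usually a static field or a static Lazy<T>.

```python
import functools


class ExpensiveClient:
    """Hypothetical stand-in for an SDK or HTTP client that is slow to build."""

    instances_created = 0  # lets us observe how often construction happens

    def __init__(self, service):
        ExpensiveClient.instances_created += 1
        self.service = service


@functools.lru_cache(maxsize=None)
def get_client(service):
    """Build the client for `service` on first use, then reuse it.

    The cache lives at module level, so in a Lambda it survives across warm
    invocations in the same execution environment.
    """
    return ExpensiveClient(service)


def handler(event, context=None):
    # Only the client this code path actually needs is created, and only once.
    service = "dynamodb" if event.get("use_dynamodb") else "s3"
    return get_client(service).service
```

Calling handler({}) repeatedly creates the S3 client only once, and the DynamoDB client is only created when a request actually takes that path. functools.lru_cache does not stop two simultaneous first calls from both building a client, but that does not arise in Lambda, where each execution environment processes one request at a time.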

Conclusion

AWS are working tirelessly to improve their side of the cold start performance issue, and continue to make great strides here. Similarly, Microsoft have a vested interest in continually improving not just JIT compilation, but the general runtime performance of .NET Core. While .NET Core does have some issues with cold starts and lukewarm starts, it is ultimately a very high-performing choice for writing AWS Lambda functions, and there are ways to remediate these issues.